Challenge Yourself with the World's Most Realistic SPLK-1003 Test.

What action is required to enable forwarder management in Splunk Web?

A. Navigate to Settings > Server Settings > General Settings, and set an App server port.

B. Navigate to Settings > Forwarding and receiving, and click on Enable Forwarding.

C. Create a server class and map it to a client inSPLUNK_HOME/etc/system/local/serverclass.conf.

D. Place an app in theSPLUNK_HOME/etc/deployment-appsdirectory of the deployment server.

Explanation:

To enable Forwarder Management in Splunk Web, which is used to manage deployment clients (usually Universal Forwarders) via a Deployment Server, you must:

Have at least one app placed inside the $SPLUNK_HOME/etc/deployment-apps directory on the deployment server.

This action enables the Forwarder Management interface in Splunk Web. Without any apps in that directory, the Forwarder Management UI will not be available, even if the instance is configured to act as a deployment server.

🔍 Additional Details:

The Deployment Server manages deployment clients using server classes and apps.

When you place an app into the deployment-apps directory, the Splunk instance recognizes that it's managing clients, which triggers the UI to display the Forwarder Management page.

📘 Splunk Docs Reference:

Official Splunk Documentation - Use forwarder management:

"To enable forwarder management in Splunk Web, you must first create at least one app in the deployment-apps directory."

Forwarder Management - Splunk Docs

❌ Why the other options are incorrect:

A. App server port relates to web UI settings, not forwarder management.

B. "Enable Forwarding" is for configuring outputs.conf to send data, not for managing forwarders.

C. serverclass.conf is necessary but not sufficient on its own to enable the Forwarder Management UI.

Which Splunk component requires a Forwarder license?

A. Search head

B. Heavy forwarder

C. Heaviest forwarder

D. Universal forwarder

Explanation:

Heavy Forwarder requires a Forwarder License because it performs additional processing (e.g., parsing, filtering, and routing) before forwarding data.

Universal Forwarder (UF) (Option D) is license-free—it does not consume a license because it only forwards raw data.

Search Head (Option A) and "Heaviest Forwarder" (Option C, which is not a real Splunk component) do not require a Forwarder license.

Key Points:

Forwarder License is needed for Heavy Forwarders, not Universal Forwarders.

Splunk licenses data based on indexed volume, but forwarder licensing is specific to the type of forwarder.

An admin is running the latest version of Splunk with a 500 GB license. The current daily volume of new data is 300 GB per day. To minimize license issues, what is the best way to add 10 TB of historical data to the index?

A. Buy a bigger Splunk license.

B. Add 2.5 TB each day for the next 5 days.

C. Add all 10 TB in a single 24 hour period.

D. Add 200 GB of historical data each day for 50 days.

Explanation:

Splunk’s license enforcement is based on the peak daily indexing volume within a 24-hour rolling window (not a strict calendar day). Here’s why Option C is correct:

Current License Capacity:

The license allows 500 GB/day.

Current daily indexing = 300 GB/day (leaving 200 GB unused).

Adding Historical Data:

If you ingest 10 TB (10,000 GB) in a single 24-hour window, the total indexed volume for that period would be:

. 300 GB (new data) + 10,000 GB (historical) = 10,300 GB → Exceeds license limit (violation).

But: Splunk allows license violations for short bursts (up to 3x the licensed amount for brief periods).

. 500 GB x 3 = 1.5 TB is the "soft" limit, but exceeding this briefly won’t immediately break indexing.

. After ingestion, the next day’s volume returns to normal (300 GB/day), avoiding prolonged violations.

Why Not Other Options?

A (Buy a bigger license): Unnecessary for a one-time historical load.

B (2.5 TB/day for 5 days): Exceeds license daily (2.5 TB + 300 GB = 2.8 TB/day → 5.6x over license).

D (200 GB/day for 50 days): Safe but too slow (historical data may be needed urgently).

Key Takeaway:

Splunk’s licensing prioritizes short-term bursts over sustained violations.

Best practice: Ingest large historical datasets all at once (outside normal indexing), then return to compliance.

Reference:

Splunk Docs: License violations

A security team needs to ingest a static file for a specific incident. The log file has not been collected previously and future updates to the file must not be indexed. Which command would meet these needs?

A. splunk add one shot / opt/ incident [data .log —index incident

B. splunk edit monitor /opt/incident/data.* —index incident

C. splunk add monitor /opt/incident/data.log —index incident

D. splunk edit oneshot [opt/ incident/data.* —index incident

Explanation:

The correct answer is A. splunk add one shot / opt/ incident [data . log —index incident According to the Splunk documentation1, the splunk add one shot command adds a single file or directory to the Splunk index and then stops monitoring it. This is useful for ingesting static files that do not change or update.

The command takes the following syntax: splunk add one shot -index

The file parameter specifies the path to the file or directory to be indexed. The index parameter specifies the name of the index where the data will be stored. If the index does not exist, Splunk will create it automatically.

Option B is incorrect because the splunk edit monitor command modifies an existing monitor input, which is used for ingesting files or directories that change or update over time. This command does not create a new monitor input, nor does it stop monitoring after indexing.

Option C is incorrect because the splunk add monitor command creates a new monitor input, which is also used for ingesting files or directories that change or update over time. This command does not stop monitoring after indexing.

Option D is incorrect because the splunk edit oneshot command does not exist. There is no such command in the Splunk CLI.

Which valid bucket types are searchable? (select all that apply)

A. Hot buckets

B. Cold buckets

C. Warm buckets

D. Frozen buckets

Explanation:

Splunk organizes indexed data into buckets with different states, each with distinct characteristics:

Hot Buckets (A):

Searchable: Yes

Actively receiving new data.

Stored in the hot database (not yet rolled to warm).

Warm Buckets (C):

Searchable: Yes

Data rolled from hot buckets after meeting size/time thresholds.

Stored in the main index directory.

Cold Buckets (B):

Searchable: Yes

Older data moved from warm due to retention policies.

Stored in colddb (still part of the index).

Frozen Buckets (D):

Not Searchable (Excluded)

Archived data (either deleted or moved to long-term storage).

Requires thawing (manual restoration) to become searchable.

Key Points:

Hot, Warm, and Cold buckets are always searchable by default.

Frozen buckets are excluded from searches unless restored.

Reference:

Splunk Docs: How the indexer stores indexes

Which pathway represents where a network input in Splunk might be found?

A. $SPLUNK HOME/ etc/ apps/ ne two r k/ inputs.conf

B. $SPLUNK HOME/ etc/ apps/ $appName/ local / inputs.conf

C. $SPLUNK HOME/ system/ local /udp.conf

D. $SPLUNK HOME/ var/lib/ splunk/$inputName/homePath/

Explanation:

The correct answer is B. The network input in Splunk might be found in the

$SPLUNK_HOME/etc/apps/$appName/local/inputs.conf file.

A network input is a type of input that monitors data from TCP or UDP ports. To configure a

network input, you need to specify the port number, the connection host, the source, and

the sourcetype in the inputs.conf file.You can also set other optional settings, such as

index, queue, and host_regex1.

The inputs.conf file is a configuration file that contains the settings for different types of inputs, such as files, directories, scripts, network ports, and Windows event logs. The

inputs.conf file can be located in various directories, depending on the scope and priority of

the settings. The most common locations are:

$SPLUNK_HOME/etc/system/default: This directory contains the default settings

for all inputs.You should not modify or copy the files in this directory2.

$SPLUNK_HOME/etc/system/local: This directory contains the custom settings for

all inputs that apply to the entire Splunk instance.The settings in this directory

override the default settings2.

$SPLUNK_HOME/etc/apps/$appName/default: This directory contains the default

settings for all inputs that are specific to an app.You should not modify or copy the

files in this directory2.

$SPLUNK_HOME/etc/apps/$appName/local: This directory contains the custom

settings for all inputs that are specific to an app.The settings in this directory

override the default and system settings2.

Therefore, the best practice is to create or edit the inputs.conf file in the

$SPLUNK_HOME/etc/apps/$appName/local directory, where $appName is the name of

the app that you want to configure the network input for. This way, you can avoid modifying

the default files and ensure that your settings are applied to the specific app.

The other options are incorrect because:

A. There is no network directory under the apps directory. The network input

settings should be in the inputs.conf file, not in a separate directory.

C. There is no udp.conf file in Splunk. The network input settings should be in the

inputs.conf file, not in a separate file. The system directory is not the

recommended location for custom settings, as it affects the entire Splunk instance.

D. The var/lib/splunk directory is where Splunk stores the indexed data, not the

input settings. The homePath setting is used to specify the location of the index

data, not the input data. The inputName is not a valid variable for inputs.conf.

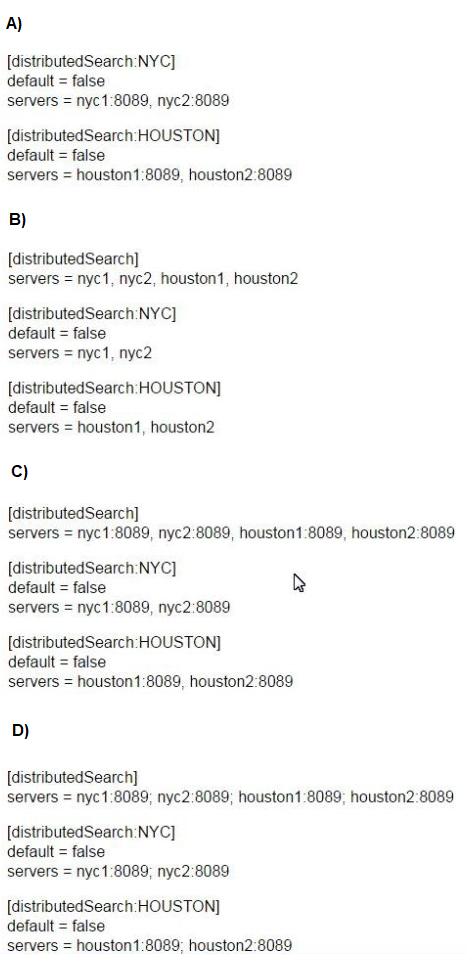

How would you configure your distsearch conf to allow you to run the search below?

sourcetype=access_combined status=200 action=purchase

splunk_setver_group=HOUSTON

A. Option A

B. Option B

C. Option C

D. Option C

Explanation:

The search splunk_server_group=HOUSTON requires a properly configured distsearch.conf with:

Server groups (e.g., [distributedSearch:HOUSTON]) to logically group indexers.

Correct server list format (host:port pairs separated by commas, not semicolons).

No redundancy (Option B omits ports, which can cause connection failures).

Why Option C?

Defines global ([distributedSearch]) and group-specific (HOUSTON, NYC) server lists.

Uses host:port format (e.g., houston1:8089) with comma separators (Splunk’s required syntax).

Matches the search’s splunk_server_group=HOUSTON filter.

Why Not Others?

A: Missing global [distributedSearch] section (limits flexibility).

B: Omits ports (:8089), which may fail if non-default ports are used.

D: Uses semicolons (;) instead of commas (invalid syntax).

Key Takeaway:

Use commas to separate servers and include ports for reliability.

Define both global and group-specific server lists for granular control.

Reference:

Splunk Docs: distsearch.conf

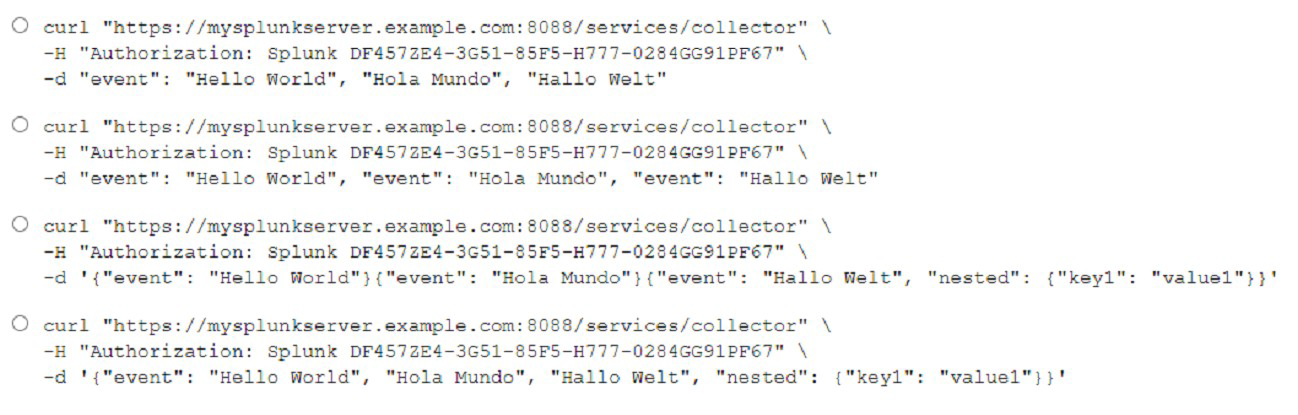

What is the correct curl to send multiple events through HTTP Event Collector?

A. Option A

B. Option B

C. Option C

D. Option D

Explanation:

curl “https://mysplunkserver.example.com:8088/services/collector” \ -H

“Authorization: Splunk DF4S7ZE4-3GS1-8SFS-E777-0284GG91PF67” \ -d ‘{“event”: “Hello

World”}, {“event”: “Hola Mundo”}, {“event”: “Hallo Welt”}’.

This is the correct curl command to send multiple events through HTTP Event Collector (HEC), which is a token-based API that allows you to send data to Splunk Enterprise from any application that can make an HTTP request. The command has the following components:

The URL of the HEC endpoint, which consists of the protocol (https), the hostname

or IP address of the Splunk server (mysplunkserver.example.com), the port

number (8088), and the service name (services/collector).

The header that contains the authorization token, which is a unique identifier that

grants access to the HEC endpoint. The token is prefixed with Splunk and

enclosed in quotation marks. The token value (DF4S7ZE4-3GS1-8SFS-E777-

0284GG91PF67) is an example and should be replaced with your own token

value.

The data payload that contains the events to be sent, which are JSON objects

enclosed in curly braces and separated by commas. Each event object has a

mandatory field called event, which contains the raw data to be indexed. The event

value can be a string, a number, a boolean, an array, or another JSON object. In

this case, the event values are strings that say hello in different languages.

A new forwarder has been installed with a manually createddeploymentclient.conf.

What is the next step to enable the communication between the forwarder and the

deployment server?

A. Restart Splunk on the deployment server.

B. Enable the deployment client in Splunk Web under Forwarder Management.

C. Restart Splunk on the deployment client.

D. Wait for up to the time set in thephoneHomeIntervalInSecssetting.

Explanation:

After manually creating the deploymentclient.conf file on a Splunk forwarder, you must restart Splunk on the forwarder (deployment client) for the new configuration to take effect and for it to initiate communication with the deployment server.

🔍 What happens during this process:

The deployment client reads the deploymentclient.conf file upon restart.

This file contains information such as:

The deployment server’s address and port

The client's identification

Upon restart, the client contacts the deployment server and checks in.

From then on, the deployment server can manage this forwarder (e.g., by assigning apps via server classes).

📘 Splunk Docs Reference:

“After creating or modifying the deploymentclient.conf file, you must restart the deployment client for the settings to take effect.”

Source: Splunk Docs – deploymentclient.conf

❌ Why the other options are incorrect:

A. Restarting the deployment server is not required at this stage.

B. There's no need to enable the client in Splunk Web—clients auto-register by checking in.

D. phoneHomeIntervalInSecs determines the next time the client checks in after initial connection, but initial connection requires a restart if config was just added.

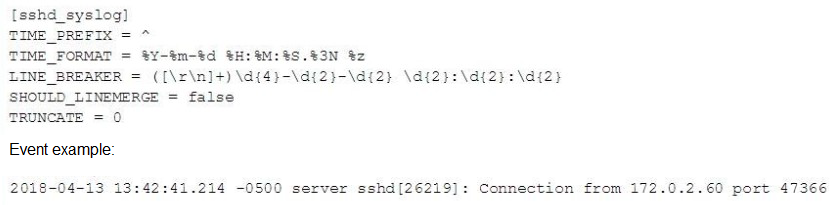

In this source definition the MAX_TIMESTAMP_LOOKHEAD is missing. Which value would fit best?

A. MAX_TIMESTAMP_L0CKAHEAD = 5

B. MAX_TIMESTAMP_LOOKAHEAD - 10

C. MAX_TIMESTAMF_LOOKHEAD = 20

D. MAX TIMESTAMP LOOKAHEAD - 30

Explanation:

MAX_TIMESTAMP_LOOKAHEAD controls how many characters Splunk reads after TIME_PREFIX to attempt to extract the timestamp using the specified TIME_FORMAT.

🔍 Analysis of the Sample Event:

2018-04-13 13:42:41.214 -0500 server sshd[26219]: Connection from 172.0.2.60 port 47366

From the beginning of the line, the timestamp portion is:

2018-04-13 13:42:41.214 -0500

Count the characters:

2018-04-13 → 10 characters

space → 1

13:42:41.214 → 12 characters

space → 1

-0500 → 5 characters

Total: 29 characters

🔧 Why 20 Is a Good Fit:

While the full timestamp is 29 characters, MAX_TIMESTAMP_LOOKAHEAD doesn't always need to cover the entire line—it just needs to capture enough characters after TIME_PREFIX to match TIME_FORMAT.

In this case:

The TIME_PREFIX = ^ (start of line)

TIME_FORMAT = %Y-%m-%d %H:%M:%S.%3N %z — this format roughly consumes 29 characters

So 20 is a commonly safe choice in default configs when the format is known and timestamp is early in the line. However, to fully and safely parse this specific format, a better value might actually be closer to 30.

🧠 Best Practice:

If timestamp is long or positioned later in the line, increase MAX_TIMESTAMP_LOOKAHEAD.

Default value is 128, but if you're optimizing for performance or managing edge parsing, you might tune it down.

📘 Splunk Docs Reference:

MAX_TIMESTAMP_LOOKAHEAD: "Specifies how many characters forward from TIME_PREFIX Splunk software should search for a timestamp."

Source: props.conf spec - Splunk Docs

❌ Why the others are incorrect:

A. 5 — Too short; won't capture even the date.

B. 10 — Still too short.

D. 30 — Technically valid, but more than necessary unless timestamp is deeper in the event. (Would still work, but not the best minimal value.)

| Page 1 out of 19 Pages |