Challenge Yourself with the World's Most Realistic SPLK-2002 Test.

New data has been added to a file watched by a monitor input, but searches show only the older data. Which splunkd.log channel would help troubleshoot this issue?

A. ModularInputs

B. TailingProcessor

C. ChunkedLBProcessor

D. ArchiveProcessor

Explanation:

When a monitored file receives new data but searches only return older events, the issue is usually related to Splunk’s file monitoring and tailing mechanism. The TailingProcessor component is responsible for detecting file updates, reading new data from monitored files, and forwarding it into the ingestion pipeline. Checking the TailingProcessor logs in splunkd.log helps determine why new data is not being picked up—whether due to CRC issues, file rotation, permissions, or tail position problems.

Correct Option:

B. TailingProcessor

Handles file monitoring, reading, and tailing of new data.

Logs when Splunk starts or stops tailing a file, detects changes, or skips data.

Primary source of troubleshooting information when new data is not being indexed.
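To see what the TailingProcessor channel reports, you isolate its lines in splunkd.log. Inside Splunk, a search such as index=_internal sourcetype=splunkd component=TailingProcessor does this. The Python sketch below applies the same filter outside Splunk. It assumes the common splunkd.log layout "date time timezone LEVEL Component [optional thread] - message". The function name is hypothetical, and any sample lines you feed it should come from your own logs.

```python
import re
from typing import Iterable, List, Tuple

# Assumed splunkd.log layout:
# "<date> <time> <tz> <LEVEL> <Component> [optional thread info] - <message>"
LINE_RE = re.compile(
    r"^(?P<date>\S+) (?P<time>\S+) (?P<tz>\S+)\s+(?P<level>[A-Z]+)\s+"
    r"(?P<component>\S+)(?: \[[^\]]*\])? - (?P<message>.*)$"
)


def channel_lines(lines: Iterable[str], component: str = "TailingProcessor") -> List[Tuple[str, str]]:
    """Return (level, message) pairs for lines written by one splunkd.log component."""
    hits = []
    for line in lines:
        m = LINE_RE.match(line.rstrip("\n"))
        if m and m.group("component") == component:
            hits.append((m.group("level"), m.group("message")))
    return hits
```

Filtering on the component keeps messages from other channels out of the way, so any warnings about skipped files, checksums, or rotation stand out.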

Incorrect Options:

A. ModularInputs

Applies to modular inputs, not to file-based monitor inputs.

Will not show tailing or file-read issues.

C. ChunkedLBProcessor

Works on data after an input has already read it. It plays no part in discovering or tailing files.

Does not handle local file monitoring and is unrelated to log tailing issues.

D. ArchiveProcessor

Responsible for processing archived files such as .gz files.

Not relevant for live monitored files with newly added data.

Reference:

Splunk Docs: Monitor Inputs and TailingProcessor Behavior

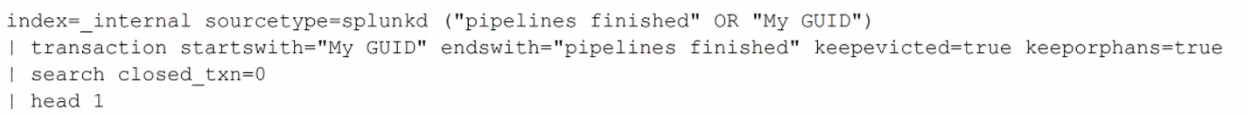

A Splunk instance has crashed, but no crash log was generated. To determine which user activity caused the crash, the administrator runs the following search:

What does searching for closed_txn=0 do in this search?

A. Filters results to situations where Splunk was started and stopped multiple times.

B. Filters results to situations where Splunk was started and stopped once.

C. Filters results to situations where Splunk was stopped and then immediately restarted.

D. Filters results to situations where Splunk was started, but not stopped.

Explanation:

This search is designed to find Splunk runs that never finished, which is critical for diagnosing a crash. The transaction command groups events that occur between a "start" event (marked by "My GUID") and an "end" event (marked by "pipelines finished"). The closed_txn field is added by the transaction command itself. It shows whether the transaction was properly closed (1) or remains open (0) because no ending event was found.

Correct Option:

D. Filters results to situations where Splunk was started, but not stopped.

This is the correct interpretation. A closed_txn=0 means the transaction is open—the process started (signaled by "My GUID") but never finished (no corresponding "pipelines finished" event was logged before the instance crashed). This directly points to the user activity that was in progress at the moment of the crash.
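The open-versus-closed logic can be modeled outside Splunk. The Python sketch below is a simplified stand-in for the transaction command, not its actual implementation. It walks one host's events in time order, opens a group at a "My GUID" event, and closes the group at the next "pipelines finished" event. Any group that never sees its end marker is reported with closed_txn=0. The function name and the event tuples are hypothetical.

```python
from typing import Dict, List, Optional, Tuple

START_MARKER = "My GUID"
END_MARKER = "pipelines finished"


def group_runs(events: List[Tuple[float, str]]) -> List[Dict]:
    """Group (timestamp, message) events into start/end runs (simplified model).

    A run opens at a START_MARKER event and closes at the next END_MARKER event.
    A run that never reaches its end marker gets closed_txn=0.
    """
    runs: List[Dict] = []
    current: Optional[Dict] = None
    for ts, msg in sorted(events):
        if START_MARKER in msg:
            if current is not None:
                # A new start while a run is still open: the previous run never finished.
                current["closed_txn"] = 0
                runs.append(current)
            current = {"start": ts, "end": None, "events": [msg]}
        elif current is not None:
            current["events"].append(msg)
            if END_MARKER in msg:
                current["end"] = ts
                current["closed_txn"] = 1
                runs.append(current)
                current = None
    if current is not None:
        current["closed_txn"] = 0  # still open when the data ends
        runs.append(current)
    return runs
```

If you keep only the runs with closed_txn == 0, you are left with the run that was in progress when the instance went down. Its events show the activity at the time of the crash.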

Incorrect Options:

A. Filters results to situations where Splunk was started and stopped multiple times.

A transaction that has been started and stopped would be a closed transaction (closed_txn=1). The search is looking for the opposite condition.

B. Filters results to situations where Splunk was started and stopped once.

Similar to option A, a single, clean start and stop cycle would result in a closed transaction (closed_txn=1), which is explicitly filtered out by this search.

C. Filters results to situations where Splunk was stopped and then immediately restarted.

This scenario would likely create multiple separate transactions (one closed, one newly opened). The search filter closed_txn=0 specifically isolates only the open, incomplete transaction that was active at the time of the crash, not the sequence of stops and starts.

Reference:

Splunk Search Reference: "transaction" command. The documentation for the transaction command explains that closed_txn is set to 0 for transactions left open (evicted before an end event was found) and to 1 for transactions that were closed. Open transactions are returned only when the command runs with keepevicted=true, so a search that filters on closed_txn=0 depends on that option.

Which of the following is true regarding the migration of an indexer cluster from single-site to multi-site?

A. Multi-site policies will apply to all data in the indexer cluster.

B. All peer nodes must be running the same version of Splunk.

C. Existing single-site attributes must be removed.

D. Single-site buckets cannot be converted to multi-site buckets.

Explanation:

The migration from a single-site to a multi-site indexer cluster involves defining new, site-aware replication policies. To handle the existing single-site data, the Cluster Manager (Master Node) needs to temporarily keep track of the old single-site attributes like replication_factor and search_factor in addition to the new multi-site attributes like site_replication_factor and site_search_factor.

The migration process involves:

Setting the new multi-site attributes (-multisite true, -available_sites, etc.) on the Cluster Manager and peer nodes.

The Cluster Manager uses the old single-site attributes to manage the existing (migrated) single-site buckets.

Once all existing single-site buckets have aged out according to their retention policy (or have been exported and re-indexed as true multi-site buckets), the Cluster Manager no longer manages any single-site buckets.

At this point, the old single-site attributes must be removed from the Cluster Manager configuration, as they are now redundant and only apply to single-site buckets that no longer exist.

Therefore, option C (existing single-site attributes must be removed) is the true statement. It describes the final, clean state of the multi-site cluster, after all legacy single-site data has aged out or been re-indexed.

Analyzing Other Options:

A. Multi-site policies will apply to all data in the indexer cluster.

False. The new multi-site policies (like site_replication_factor) only apply to new data indexed after the migration. Existing single-site buckets are managed using the old single-site replication policy until they age out.

B. All peer nodes must be running the same version of Splunk.

True, but this is a universal rule for any indexer cluster (single-site or multi-site) and is not a unique requirement or truth regarding the migration specifically. It must be true before, during, and after the migration.

D. Single-site buckets cannot be converted to multi-site buckets.

False, or at least misleading. During migration, existing single-site buckets are tagged with a site of origin, so in that limited sense they are converted. However, they do not replicate across sites according to the new multi-site rules. To get full multi-site behavior (copies placed on specific sites per the site_replication_factor), you must re-index the old data into the multi-site cluster or wait for it to age out. Because the buckets are converted in the tagging sense, the statement as written is technically false, even though they do not gain full multi-site replication without re-indexing.

Which Splunk internal field can confirm duplicate event issues from failed file monitoring?

A. _time

B. _indextime

C. _index_latest

D. latest

Explanation:

Duplicate events can occur if a file monitor input fails to properly update its checkpoint, causing it to re-read a file from the beginning after a restart. To confirm this, you need a field that can distinguish between the original event and the duplicate. While _time reflects the event's timestamp, _indextime records when Splunk actually indexed the event, which is key for identifying duplicates that were indexed at different times.

Correct Option:

B. _indextime:

This internal field records the timestamp of when the event was processed and indexed by Splunk. If a file is re-read due to a checkpointing failure, the original events and the duplicate events will have identical _time values but different _indextime values. Searching for events with the same _time and source but different _indextime is a reliable method to confirm this specific type of duplication.
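The check described above can be sketched in Python over exported events. This is an illustration under assumptions, not a Splunk feature. Events are plain dicts that carry the default fields. The grouping key (_time, source, _raw) is one reasonable choice. The function name is hypothetical. Inside Splunk, the same idea could be expressed as a stats search that counts distinct _indextime values per group.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

# Each event is a dict carrying the default fields _time, _indextime, source, _raw.
Event = Dict[str, object]


def find_reingested(events: List[Event]) -> List[Tuple[Tuple, List[int]]]:
    """Return groups of identical events that were indexed at more than one time.

    Events are keyed on (_time, source, _raw). A key seen with two or more
    distinct _indextime values suggests the same data was read twice, for
    example after a restart that lost the file checkpoint.
    """
    index_times: Dict[Tuple, set] = defaultdict(set)
    for ev in events:
        key = (ev["_time"], ev["source"], ev["_raw"])
        index_times[key].add(ev["_indextime"])
    return [
        (key, sorted(times))
        for key, times in index_times.items()
        if len(times) > 1
    ]
```

The _time value alone cannot separate the two copies, because both carry the same timestamp. The differing _indextime values are the evidence.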

Incorrect Options:

A. _time:

This field represents the timestamp extracted from the event data itself. For duplicates originating from the same log file, the _time value will be identical for both the original and the re-ingested event, making it useless for distinguishing between them.

C. _index_latest:

_index_latest is not a field stored with each event. It is a search time modifier that restricts results by index time, so it does not record when each copy of an event was indexed.

D. latest:

latest is a search time modifier that sets the end of the search's time range. It is not per-event metadata and cannot distinguish an original event from its duplicate.

Reference:

Splunk Enterprise Admin Manual: "Default fields". The documentation for _indextime states it is the "time that the event was indexed by Splunk." This is the definitive field to use when investigating issues related to event ingestion timing, such as duplicate events caused by input failures or checkpoint resets.

When designing the number and size of indexes, which of the following considerations should be applied?

A. Expected daily ingest volume, access controls, number of concurrent users

B. Number of installed apps, expected daily ingest volume, data retention time policies

C. Data retention time policies, number of installed apps, access controls

D. Expected daily ingest volumes, data retention time policies, access controls

Explanation:

When designing a robust and scalable Splunk environment, the structure of the indexes is paramount. Index design directly influences performance, cost (storage), and security.

Sizing (Number and Size): This is driven by the Expected Daily Ingest Volumes (determines how fast indexes fill) and Data Retention Time Policies (determines how long indexes must exist and the total storage needed).

Number of Indexes: This is driven by Access Controls and differing Retention Policies. If different data types have different security requirements or need to be kept for different lengths of time, they must be separated into different indexes.
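A little arithmetic makes the sizing relationship concrete. The Python sketch below assumes the commonly cited Splunk rule of thumb: compressed raw data takes about 15% of the original ingest volume, and index (tsidx) files take about 35%. Real ratios depend on the data, so treat these defaults as placeholders and replace them with measured values. In an indexer cluster, every copy of a bucket keeps the raw data, while only searchable copies also keep index files. The function name is hypothetical.

```python
def index_storage_gb(
    daily_ingest_gb: float,
    retention_days: int,
    rawdata_ratio: float = 0.15,   # assumed: compressed raw data vs. ingested volume
    tsidx_ratio: float = 0.35,     # assumed: index files vs. ingested volume
    replication_factor: int = 1,
    search_factor: int = 1,
) -> float:
    """Estimate total disk needed to hold one index for its full retention period.

    Raw data is stored replication_factor times and index files are stored
    search_factor times, so each factor scales its own component.
    """
    per_day = daily_ingest_gb * (rawdata_ratio * replication_factor + tsidx_ratio * search_factor)
    return per_day * retention_days


# Example: 100 GB/day kept for 90 days in a cluster with RF=3, SF=2.
estimate = index_storage_gb(100, 90, replication_factor=3, search_factor=2)
```

With these assumptions, the example works out to about 10,350 GB. Doubling either the daily volume or the retention period doubles the result, which is why both inputs drive index sizing.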

Correct Option:

D. Expected daily ingest volumes, data retention time policies, access controls

Expected Daily Ingest Volumes: This dictates the size of the indexer tier (how many indexers are needed) and the hot/warm storage requirements. It sets the rate at which data flows into the indexes.

Data Retention Time Policies: This dictates the index lifecycle and total storage capacity (hot/warm/cold) needed. For example, security logs might require 365 days of retention, while operating system logs might only need 30 days, necessitating separate indexes for cost management.

Access Controls: This dictates the number of indexes. Different data sets must be placed into separate indexes if they require different Role-Based Access Control (RBAC) permissions. For example, sensitive HR data must be in a separate index from general IT logs so that a non-privileged user cannot search the HR index.
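One way to see how retention and access control drive the number of indexes is to group data sources by the combination of the two. The Python sketch below is a hypothetical planning helper, not a Splunk tool. The source names, retention periods, and role names in the example are made up. Each distinct (retention, allowed roles) pair becomes a candidate index.

```python
from collections import defaultdict
from typing import Dict, FrozenSet, List, Tuple

Plan = Dict[Tuple[int, FrozenSet[str]], List[str]]


def plan_indexes(sources: Dict[str, Tuple[int, FrozenSet[str]]]) -> Plan:
    """Group data sources that share both a retention period and a set of allowed roles.

    Sources that differ in either attribute should not share an index, because
    retention is configured per index and search access is granted per index.
    """
    groups: Plan = defaultdict(list)
    for name, (retention_days, roles) in sources.items():
        groups[(retention_days, roles)].append(name)
    return dict(groups)


# Hypothetical inventory: name -> (retention in days, roles allowed to search it)
inventory = {
    "firewall": (365, frozenset({"security"})),
    "ids": (365, frozenset({"security"})),
    "os_logs": (30, frozenset({"it_ops"})),
    "hr_app": (365, frozenset({"hr"})),
}
```

Running plan_indexes on this inventory yields three candidate indexes. The HR data gets its own index even though it shares a 365-day retention with the security data, because a different role must be able to search it.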

Incorrect Options:

A. Expected daily ingest volume, access controls, number of concurrent users

Number of concurrent users affects Search Head and Indexer CPU/RAM sizing (search performance), but it is a search-time consideration, not a factor that directly dictates the number of indexes or their storage size/retention.

B. Number of installed apps, expected daily ingest volume, data retention time policies

The Number of installed apps affects the Search Head configuration and deployment strategy (e.g., using a Deployer/SHC), but it does not directly determine how many indexes are required or how large they must be.

C. Data retention time policies, number of installed apps, access controls

This misses the critical factor of Expected Daily Ingest Volumes, which is the primary metric for calculating the size of the indexes and the necessary storage hardware. The number of installed apps is irrelevant to index sizing.

Reference:

Splunk Documentation: Design considerations for indexes; Managing Index Storage. Concept: index planning and sizing (separating data by security and retention requirements is a best practice).

A customer is migrating 500 Universal Forwarders from an old deployment server to a new deployment server, with a different DNS name. The new deployment server is configured and running.

The old deployment server deployed an app containing an updated deploymentclient.conf file to all forwarders, pointing them to the new deployment server. The app was successfully deployed to all 500 forwarders.

Why would all of the forwarders still be phoning home to the old deployment server?

A. There is a version mismatch between the forwarders and the new deployment server.

B. The new deployment server is not accepting connections from the forwarders.

C. The forwarders are configured to use the old deployment server in $SPLUNK_HOME/etc/system/local.

D. The pass4SymmKey is the same on the new deployment server and the forwarders.

Explanation:

When a deployment client receives an app from a deployment server, the app's configuration files are stored under $SPLUNK_HOME/etc/apps/. However, a forwarder's deployment server setting is often defined in deploymentclient.conf in $SPLUNK_HOME/etc/system/local/, for example when it was originally set with the splunk set deploy-poll command. In the global context, configuration files in system/local have the highest precedence and override settings delivered in apps, so the local setting that points to the old server stays in effect.

Correct Option:

C. The forwarders are configured to use the old deployment server in $SPLUNK_HOME/etc/system/local.

This is the most likely cause. The local deploymentclient.conf file has a higher precedence than any app-deployed version of the same file. Even though the new app with the updated configuration was deployed successfully, the original local configuration is still active, causing the forwarders to continue checking in with the old deployment server.
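The precedence rule can be illustrated with a small model. The Python sketch below is a simplification under stated assumptions. It covers only the global-context order for a single setting: system/local first, then app local directories, then app default directories, then system/default. It ignores the ordering among individual apps, and the host names are hypothetical. The setting shown, targetUri in the [target-broker:deploymentServer] stanza, is where deploymentclient.conf names the deployment server.

```python
from typing import Dict, Optional, Tuple

# Global-context precedence, highest first (simplified: ordering among apps is ignored).
LAYERS = ["system/local", "apps/*/local", "apps/*/default", "system/default"]

Settings = Dict[Tuple[str, str], str]  # (stanza, key) -> value


def effective_setting(layers: Dict[str, Settings], stanza: str, key: str) -> Optional[Tuple[str, str]]:
    """Return (layer, value) from the highest-precedence layer that defines stanza/key."""
    for layer in LAYERS:
        value = layers.get(layer, {}).get((stanza, key))
        if value is not None:
            return layer, value
    return None


# Hypothetical forwarder state: system/local still names the old server,
# while the app pushed by the old deployment server names the new one.
forwarder = {
    "system/local": {("target-broker:deploymentServer", "targetUri"): "old-ds.example.com:8089"},
    "apps/*/default": {("target-broker:deploymentServer", "targetUri"): "new-ds.example.com:8089"},
}
print(effective_setting(forwarder, "target-broker:deploymentServer", "targetUri"))
# ('system/local', 'old-ds.example.com:8089')
```

Once the system/local entry is removed, the model falls through to the app-deployed value, which matches the fix implied by the explanation.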

Incorrect Options:

A. There is a version mismatch between the forwarders and the new deployment server.

While a significant version mismatch could cause issues, it would typically prevent communication entirely or cause failures, not result in the forwarders persistently phoning home to the old server. The described behavior is a configuration precedence issue.

B. The new deployment server is not accepting connections from the forwarders.

If the new server were refusing connections, the forwarders would log connection errors when they tried to reach it. A deployment client does not fall back to a previously used server on its own. The forwarders keep phoning home to the old server, which shows that their active configuration still points there.

D. The pass4SymmKey is the same on the new deployment server and the forwarders.

A matching pass4SymmKey is the normal, working state, so it cannot explain the problem. Even a mismatched key would only cause authentication failures against the new server. The forwarders would not return to the old server unless it were still specified in their active configuration.

Reference:

Splunk Enterprise Admin Manual: "Configuration file precedence". The documentation explains that, in the global context, files in etc/system/local/ take precedence over settings in apps, including deployed apps. To change the deployment server in this situation, you must edit or remove the deploymentclient.conf in system/local directly, because no app-deployed file can override it.

When troubleshooting a situation where some files within a directory are not being indexed, the ignored files are discovered to have long headers. What is the first thing that should be added to inputs.conf?

A. Decrease the value of initCrcLength.

B. Add a crcSalt= attribute.

C. Increase the value of initCrcLength.

D. Add a crcSalt= attribute.

Explanation:

When files are not being indexed and investigation shows that they contain long headers, the likely cause is the CRC check. Splunk computes this CRC to decide whether a file is new or already processed, and it covers only the beginning of the file. The initCrcLength parameter controls how many bytes (256 by default) Splunk reads from the start of a file to compute its CRC. If several files share a header longer than that, their CRCs match, and Splunk treats them as the same file and skips all but one. Increasing initCrcLength is the correct first step.

Correct Option:

C. Increase the value of initCrcLength

Ensures Splunk reads more of the file header when calculating CRC.

Prevents different files with similar long headers from being incorrectly treated as duplicates.

Common fix when files are skipped because Splunk thinks they were already indexed.
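A stand-in checksum demonstrates the effect of initCrcLength. The Python sketch below uses zlib.crc32 over the first N bytes of each file. Splunk's internal checksum is not necessarily the same function, so only the principle carries over. The function name and file contents are hypothetical. Two files share a 300-byte header: with a 256-byte window they look identical, and a larger window reaches the byte where they differ.

```python
import zlib


def head_crc(data: bytes, init_crc_length: int = 256) -> int:
    """Checksum of the first init_crc_length bytes (stand-in for Splunk's file CRC)."""
    return zlib.crc32(data[:init_crc_length])


# Hypothetical files: identical 300-byte header, then one differing character.
header = b"#" * 299 + b"\n"
file_a = header + b"host=a event=start\n"
file_b = header + b"host=b event=start\n"

print(head_crc(file_a) == head_crc(file_b))              # True: only the shared header is hashed
print(head_crc(file_a, 1024) == head_crc(file_b, 1024))  # False: the differing byte is now included
```

The same reasoning shows why decreasing the value makes things worse. A smaller window only widens the set of files that hash identically.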

Incorrect Options:

A. Decrease the value of initCrcLength

Reduces the CRC scan size, making the duplicate detection problem worse, not better.

Would cause more files with identical header beginnings to be skipped.

B. Add a crcSalt= attribute

Useful when filenames or paths need to be included in CRC calculation, but this is not the first step for long-header issues.

Does not solve the underlying problem of Splunk not reading enough of the header.

D. Add a crcSalt= attribute

Duplicate option; same reasoning as Option B.

Helpful only in cases of identical file content from different locations—not long header issues.

Reference:

Splunk Docs: Configure CRC Checking and initCrcLength

When using ingest-based licensing, what Splunk role requires the license manager to scale?

A. Search peers

B. Search heads

C. There are no roles that require the license manager to scale

D. Deployment clients

Explanation:

The License Manager (formerly License Master) is primarily a repository for license files and a reporting server that tracks daily indexing volume reported by its peers (Indexers/Search Peers). In an ingest-based licensing model, its function is reporting and enforcement, not active indexing or searching. As its workload is generally low (receiving periodic reports from license peers, processing and summarizing that usage data), it is typically not a component that requires horizontal scaling in a large environment. It can often be co-located with other management components like the Monitoring Console or Indexer Cluster Manager.

Correct Option:

C. There are no roles that require the license manager to scale

Primary Function: The License Manager's main job is to aggregate daily indexing usage data (GB/day) from its peers and check this against the total license quota.

Low Resource Needs: This task is generally low-impact on system resources compared to indexing or searching.

Scaling: While you should ensure its underlying hardware is appropriately sized to handle the reports from all its license peers (especially in very large environments), the License Manager is not typically a horizontally scalable role like Search Peers or Search Heads; you do not run multiple License Managers reporting to each other to increase capacity for the licensing function itself.
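At its core, the license manager sums reported usage and compares it with the quota. The Python sketch below models only that arithmetic. The function name, peer names, volumes, and quota are hypothetical. Real license enforcement also involves warning and violation rules, which are not modeled here.

```python
from typing import Dict, Tuple


def daily_license_check(peer_usage_gb: Dict[str, float], quota_gb: float) -> Tuple[float, bool]:
    """Sum one day's indexed volume across license peers and compare it with the quota.

    In this model the work is a single pass over the peer reports, which
    illustrates why the license manager itself rarely needs to scale out.
    """
    total = sum(peer_usage_gb.values())
    return total, total > quota_gb


# Hypothetical daily usage reports from three indexers (GB).
usage = {"idx1": 120.0, "idx2": 95.5, "idx3": 80.0}
total, over_quota = daily_license_check(usage, quota_gb=300.0)
```

Adding more indexers adds entries to the sum but does not change the nature of the task. This matches the point that indexers scale while the license manager does not.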

Incorrect Options:

A. Search peers

Scaling Need: Search Peers (Indexers) are the components that actually perform the indexing (ingestion). They need to scale (add more nodes) to handle increasing ingestion volume, but they report their usage to the License Manager; they don't require the License Manager itself to scale.

B. Search heads

Scaling Need: Search heads are scaled out (for example, into a search head cluster) to handle many concurrent users and searches. Although they are typically connected to the license manager as license peers, they do not index the data that ingest-based licensing meters, so they do not drive the license manager's scale requirements.

D. Deployment clients

Scaling Need: Deployment clients, mostly forwarders, grow in number with the size of the environment. Universal forwarders do not report license usage, so their number does not drive the license manager's scale. Indexers report usage as license peers, but that is unrelated to their role as deployment clients.

Reference:

Splunk Documentation: Configure a license manager

The master node distributes configuration bundles to peer nodes. In which directory do peer nodes receive the bundles?

A. apps

B. deployment-apps

C. slave-apps

D. master-apps

Explanation:

In an indexer cluster, the master node (cluster manager) is responsible for distributing configuration bundles to all peer nodes. To maintain a clear separation between locally managed apps and those controlled by the cluster manager, Splunk uses a specific directory on the peers to receive these bundles. This prevents conflicts and allows the cluster to enforce configuration consistency across all nodes.

Correct Option:

C. slave-apps:

This is the correct directory name. When the cluster manager pushes a configuration bundle, each peer node stores it in $SPLUNK_HOME/etc/slave-apps/ and reads those configurations directly from that directory. The contents are not copied into etc/apps. The name reflects the older master/slave terminology: the node receives its configuration from a master. Newer Splunk releases call these directories manager-apps (on the manager) and peer-apps (on the peers).

Incorrect Options:

A. apps:

This is the standard directory for apps on any Splunk instance. However, the manager does not push the bundle here, and the peers do not copy the contents of slave-apps into it. The target of the bundle is slave-apps.

B. deployment-apps:

This directory belongs to a deployment server, which uses it to distribute apps to deployment clients such as universal forwarders. An indexer cluster's master node does not use it to distribute bundles to peer nodes.

D. master-apps:

This directory is found on the cluster manager itself. The manager stores the apps and configurations to be bundled in its etc/master-apps directory. It does not exist on the peer nodes.

Reference:

Splunk Enterprise Admin Manual: "How the cluster manager distributes configuration bundles". The documentation specifies that the cluster manager distributes configuration bundles from its etc/master-apps directory to the etc/slave-apps directory on each peer node. The peer then applies the configurations from slave-apps to its runtime environment.

In which index is metrics.log stored?

A. main

B. _telemetry

C. _internal

D. _introspection

Explanation:

The metrics.log file is one of Splunk’s core internal logs. It captures performance-related metrics such as pipeline activity, queue sizes, and indexing performance. All Splunk internal logs, including metrics.log, are indexed into the _internal index. This index is used by Splunk administrators to monitor system health, performance, and operational behavior.

Correct Option:

C. _internal

All Splunk internal operational logs, including metrics.log, splunkd.log, and others, are stored here.

Used for monitoring Splunk performance, indexing pipelines, and queue behavior.

Essential for troubleshooting and building health dashboards.
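Much of metrics.log consists of comma-separated key=value pairs, such as the queue metrics used in health dashboards. The Python sketch below parses one such line and computes how full the queue is. The function names are hypothetical. The test line is an illustrative example of the group=queue format, so check the field names against your own _internal data.

```python
import re
from typing import Dict

# key=value pairs separated by commas, e.g. "group=queue, name=parsingqueue, max_size_kb=512"
KV_RE = re.compile(r"(\w+)=([^,\s]+)")


def parse_metrics_line(line: str) -> Dict[str, str]:
    """Extract key=value pairs from the message part (after " - ") of a metrics.log line."""
    _, _, message = line.partition(" - ")
    return dict(KV_RE.findall(message))


def queue_fill_pct(fields: Dict[str, str]) -> float:
    """Percentage of the queue's maximum size currently in use."""
    return 100.0 * float(fields["current_size_kb"]) / float(fields["max_size_kb"])
```

A queue that stays near 100% over time is a common sign of a bottleneck further down the pipeline. Inside Splunk, you would run the same calculation with a search over the _internal index.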

Incorrect Options:

A. main

This is the default index for user-ingested data.

Splunk does not store any internal operational logs here.

B. _telemetry

Stores Splunk usage and product telemetry when enabled, not system operational logs.

Does not contain metrics.log.

D. _introspection

Contains system-level introspection data such as CPU, memory, disk stats.

Its data comes from separate introspection logs (such as resource_usage.log), not from metrics.log.

Reference:

Splunk Docs: Internal Logs and the _internal Index
